Event Logging configuration

This page contains third-party references. We strive to keep our content up to date; however, content referring to external vendors may change independently of Omada. If you spot any inconsistency, please report it to our Helpdesk.

The logging framework provides many log entries that can be directed to several log targets.

In the default configuration, all logs except authentication-related ones go through the Omada Logging Framework. When the logging framework is enabled, a large number of log entries is created. If you need to limit the number of log entries for capacity reasons when performing an Omada Identity upgrade, disable the logging framework for the initial load using the Enable system event logging customer setting.

In the logging framework, the log of successful logons covers only:

- OpenID

- SAML

- Forms logon

Currently, the logging framework does not log successful logons for:

- Active Directory SSO

- Basic Authentication

The following sections list the event types and categories that are logged:

Omada Logging Framework event types

| Event | Event ID | Message |

|---|---|---|

| ServerConfiguration | 10002 | Failed loading code assembly: {fileName} |

| ServerConfiguration | 10002 | Error reading configuration object secrets from Vault Service |

| ServerConfiguration | 10002 | ServerConfiguration convert error ({key}) |

| ServerConfiguration | 10002 | ReportViewerException: {ex.Message}. |

| LicenseError | 10003 | GetLicenseInfo |

| LicenseError | 10003 | License |

| ExecuteTimer | 10005 | Exception occurred when executing dataexchange |

| ExecuteTimer | 10005 | ExecuteDataObjectEventActions |

| ExecuteTimer | 10005 | ExecuteProcessEventAction |

| ExecuteTimer | 10005 | Execute Timer: {timerId}. |

| ExecuteTimer | 10005 | Execute Timer: {timerId} Failed. |

| ObjectNotFound | 10100 | Cannot display object with ID {dataObjectId} either because it does not exist or because you do not have access to it |

| FailureAudit | 10400 | Audit Failure for {customer}\{userName}. The password is invalid. |

| FailureAudit | 10400 | Audit Failure for {customer}\{userName}. The user is not found |

| SamlResponseFailed | 10401 | SamlResponse not base64 encoded |

| SamlResponseFailed | 10401 | SAMLResponse XML invalid: {responseXml} |

| SamlResponseFailed | 10401 | SAMLResponse not valid according to schema |

| SamlResponseFailed | 10401 | SAMLResponse InResponseTo not valid: {requestId} |

| SamlResponseFailed | 10401 | SAMLResponse audience not valid |

| SamlResponseFailed | 10401 | SAMLResponse expired |

| SamlResponseFailed | 10401 | SAMLResponse signature reference cannot be validated |

| SamlResponseFailed | 10401 | SAMLResponse signature cannot be validated |

| OpenIDResponseFailed | 10402 | Token is not a well-formed JWT |

| OpenIDResponseFailed | 10402 | SecurityToken is not a JWT. |

| OpenIDResponseFailed | 10402 | Error occurred validating JWT. |

| OpenIDResponseFailed | 10402 | Failed to discover openid configuration from {endpoint} |

| OpenIDResponseFailed | 10402 | {message} |

| FailureAudit | 10403 | Failed to set new password |

| FailureAudit | 10404 | Audit Failure for {customer}\{userName}. The user is inactive. |

| FailureAudit | 10405 | Audit Failure for {customer}\{userName}. Too many logon attempts ({logonAttempts}). The user has been deactivated. |

| FailureAudit | 10406 | Audit Failure for {customer}\{userName}. The password has expired ({userInfo2.LastPasswordChange.ToString("g")}). |

| SamlConfiguration | 10407 | SAML certificate with serial {IdpCertSerialNo} not found |

| SamlConfiguration | 10407 | SAML certificate does not have private key and will not be used for signing the logout request |

| SamlConfiguration | 10407 | Failed to discover SAML configuration from {endpoint}. Reverting to legacy configuration. |

| LogConfiguration | 10408 | GetConfigurationData |

| LogConfiguration | 10408 | Error in log configuration |

| LogConfiguration | 10408 | Unable to set up logging of changes to property {sysname}. Property does not exist. |

| SuccessAudit | 10409 | Logon successful for {customer}\{tbUserName.Text} |

| Logoff | 10410 | Logoff initiated for {customerName}\\{AppIdentity.Identity.UserInfo.UserName} |

| ClientAuth | 10420 | Unexpected error generating token. |

| CodeMethodException | 10500 | Tabular Model processing failed after {totalSeconds} seconds. |

| CodeMethodException | 10500 | StartImportProfile failed |

| CodeMethodException | 10500 | CheckForPotentiallyStateImport failed |

| CodeMethodException | 10500 | Survey loaded by id:{surveyId} and key {sourceKey} is null |

| CodeMethodMalConfiguration | 10502 | Activity: {activityToReassign.Id} was not assigned due to unmap of the assignee group for the specified tag |

| CodeMethodValidation | 10503 | CodeMethod {method} ({actionId}) on EventDefinition {eventDefinitionName} ({eventDefinitionId}) failed: {errorMessage} |

| AccessModifierValidation | 10504 | AccessModifier on {parentType} {parentName} is obsolete: {accessModifierClassInfoName} |

| AccessModifierValidation | 10504 | AccessModifier failed on {parentType} {parentName}: {e.Message} |

| AccessDataUploadError | 10505 | OIM_AccessDataUploadHandler |

| ReferencedObjectDeleted | 10506 | Referenced object name not found ({dataObjectId}) |

| XmlSchemaFileUpdateError | 10550 | XML Schema file path not found: "{path}" |

| XmlSchemaFileUpdateError | 10550 | Error loading xml schema: "{path}": {ex.Message} |

| DatabaseScriptExecute | 10601 | Error loading SystemUpdateActions |

| DatabaseScriptExecute | 10601 | The following script failed to execute: {cmdStr} |

| AppStringsImported | 10602 | ImportConfigurationChanges - UpdateAllAppStrings |

| UpdateActionAssemblyError | 10603 | Cannot resolve types in assembly "{assembly.CodeBase}" |

| UpdateActionObjectUpdated | 10605 | Error Updating the Omada Identity System Default Queries |

| UpdateActionDataError | 10606 | Failed to migrate account resource: {accountResource.DisplayName} |

| UpdateActionDataError | 10606 | Error enabling Identity Queries for the Omada Identity System. |

| UpdateActionDataError | 10606 | Error Updating the Omada Identity System Default Queries. |

| MailTooManyReceivers | 10700 | Refuse to send event mails as there are more than {maxReceivers} receivers (eventdef.id: {eventDef.Id} actionid: {action.Id} actionobj.id {actionObject.Id} transitionid: {transitionId} receivers: {receivers.Count}" |

| MailTooManyReceivers | 10700 | Refused to send event mails as there are more than {maxReceivers} receivers ({receiverUserIds.Count}) |

| MailNoValidEmailAddress | 10701 | Mail receiver does not have a valid email address |

| Mail sent failure | 10702 | Could not send mail |

| MailCannotBeQueued | 10703 | MailController.SendMail() |

| MailCannotDecryptVar | 10704 | DecryptFields |

| MailCannotRemoveMailFromQueue | 10705 | Could not remove mail from queue |

| MailCannotAddMailLog | 10706 | Failing to add mail log ({mailId}) |

| MailCannotAddMailLog | 10706 | Failing to create mail log ({mailId}) |

| Mail sent | 10707 | Mail sent |

| MailConnection | 10708 | Error when loading the NotificationSettings ConfigurationData |

| MailCheckQueue | 10710 | TimerService.CheckMailQueue (Customer {customer.Id}) |

| MailSendMails | 10711 | TimerService.SendMailsFromQueue Queued: {queueCount} |

| ILMExportFailed | 10900 | UpdateDataObject failed with message: {e.Message} avp source is: {avpText} |

| WebRequest | 11001 | Failed to send email message to support. |

| SimulationHubRun | 12003 | RunViolationImpactSimulation |

| RunArchiveBatch | 12100 | ArchivingManager.DoExecute |

| WebserviceError | 13000 | Error occurred when trying to test if RoPE RemoteApi is alive |

| WebserviceError | 13000 | Cannot get last import status for system {sysId} |

| WebserviceError | 13000 | Cannot connect to Import service |

| WebserviceError | 13000 | Webservice exec error PushConfiguration() |

| WebserviceError | 13000 | Webservice exec error |

| WebPhishingAttempt | 13100 | not a local ULR |

| Password change | 13210 | Audit password change for {customerName}\\{AppIdentity.Identity.UserInfo.UserName} |

| PolicyCheckConfigurationError | 13350 | Configuration error in PeerAccessPolicyCheck |

| PolicyCheckExecutionError | 13351 | Error processing PeerAccessPolicyCheck |

| ODataError | 13400 | The user does not have read access for the 'Properties' Authorization role element |

| ODataError | 13400 | Error parsing ETag |

| ODataError | 13400 | BadRequestNoDataReceived |

| ODataError | 13400 | OData error |

| GraphQLError | 13500 | GraphQL query caused exception. |

| GraphQLError | 13500 | GraphQL query failed with errors. |

| GraphQLError | 13500 | Error deserializing value of {CustomerSettingKey.UiHomePageActions} |

| GraphQLError | 13500 | GraphQL error |

| DataExchangeError | 13600 | Error executing data exchange! |

| AjaxGridError | 14000 | DataProducerFactory - GetProducers error |

| SurveyFeatureInfo | 15000 | Survey data resend failed to complete for {Count} of {tasksLength} surveys. |

| SurveyFeatureInfo | 15000 | Survey data resend failed to complete |

| SurveyFeatureInfo | 15000 | Could not load survey template with id: {surveyTemplateId} |

| SurveyOwnershipNotAccepted | 15001 | Ownership not accepted for {dataObject.DisplayName} |

| ComplexDataSetBuilderError | 16000 | ComplexDataSetBuilderFactory - GetDataSetBuilders error |

| CrossDatabaseQueryDetected | 16001 | DataSource "{dataSourceName}" has one or more cross database joins which is not recommended from a performance and compatibility perspective |

| CrossDatabaseQueryDetected | 16001 | Survey "{surveyTemplate.Name}" has a data source "{dataSource.Name}" with one or more cross-database joins which is not recommended from a performance and compatibility perspective |

| KPIEvaluationError | 17000 | Error evaluating KPI '{kpi.Name}' ({kpi.Id}) counter and status |

| VirtualPropertyResolverError | 18000 | VirtualPropertyResolverFactory - GetVirtualPropertyResolvers error |

| Identity disabled | 23001 | A data object of type Identity was modified. Identity disabled |

| Identity locked | 23002 | A data object of type Identity was modified. Identity locked |

| Identity re-enabled | 23003 | A data object of type Identity was modified. Identity re-enabled |

| Request submitted | 23101 | A data object of type TRG_ACCESSREQUEST was created. Request submitted |

| Request approved | 23102 | A data object of type ResourceAssignment was modified. Request approved |

| Request rejected | 23103 | A data object of type ResourceAssignment was modified. Request rejected |

| Members added | 23201 | Memberships changed for user group "{UserGroup}". Members added ({Number}): {Identity} |

| Members removed | 23202 | Memberships changed for user group "{UserGroup}". Members removed ({Number}): {Identity} |

| Resource changed | 23203 | A data object of type Resource was modified. Resource changed |

| Approval configuration changed | 23204 | A data object of type ResourceFolder was modified. Approval configuration changed |

| Assignment policy changed | 23205 | A data object of type AssignmentPolicy was modified. Assignment policy changed |

| Constraint changed | 23206 | A data object of type Constraint was modified. Constraint changed |

| Prioritization policy changed | 23207 | A data object of type Prioritization policy was modified. Prioritization policy changed |

| Survey started | 23301 | A data object of type TRG_SURVEY was modified. Survey started |

| Survey completed | 23302 | A data object of type TRG_SURVEY was modified. Survey completed |

| System owner changed | 23303 | A data object of type System was modified. System owner(s) changed |

| System classification changed | 23304 | A data object of type System was modified. System classification changed |

| Identity created | 23305 | A data object of type Identity was created. Identity created |

| Identity modified | 23306 | A data object of type Identity was modified. Identity modified |

| Identity manager changed | 23307 | A data object of type Identity was modified. Identity manager(s) changed |

| Org. Unit manager changed | 23308 | A data object of type OrgUnit was modified. Org. unit manager(s) changed |

| New system onboarded | 23401 | A data object of type System was created. New system on-boarded |

| Technical identity requested | 23402 | A data object of type TECHIDENTREQUEST was created. Technical identity requested |

| Warehouse import succeeded | 23403 | A data object of type ODWIMPORTPROFILE was modified. Warehouse import successful |

| Warehouse import partially succeeded | 23404 | A data object of type ODWIMPORTPROFILE was modified. Warehouse import partially succeeded |

| Warehouse import failed | 23405 | A data object of type ODWIMPORTPROFILE was modified. Warehouse import failed |

Omada Logging Framework categories

Note that some categories are used in more than one component. Such categories are listed as Duplicate.

| Component | Category | Description |
|---|---|---|
| Enterprise Server | AccessRequests | Anything related to requesting access, including review, approvals, denials, etc. |
| | Authentication | Logging in and out of Omada Identity, failed login attempts, wrong password used. |
| | Configuration | Configuration changes and issues such as errors in the system onboarding configuration, changes to views, properties, workflows, etc. |
| | Debug | Debug information, additional information to debug issues. |
| | Exception | When the code doesn't know what to do anymore. |
| | Governance | Reports generated, attestations, classifications, Risk, SoD matters. |
| | Password | Events related to password resets and filter. |
| | Security | Identity lock-out, re-enabling of identities. |
| | SystemOperation | All events regarding timers that have executed, and system events and processes that have been running or failing. |
| | UserActions | Users performing searches, downloads. |
| | | Mail related issues, mails being sent, not sent. |
| | Identity Lifecycle | Requesting, adding, changing, disabling identities. |
| | Business Alignment | Create, update, delete roles, policies, contexts. |
| Role and Policy Engine | Authentication | Logging in and out of Omada Identity, failed login attempts, wrong password used. |
| | Calculation | |
| | Configuration | Configuration changes and issues such as errors in the system onboarding configuration, changes to views, properties, workflows, etc. |
| | Data | Data that is processed in Omada Identity, e.g. identities, resources and accounts, but excluding configuration data. |
| | Debug | Debug information, additional information to debug issues. |
| | Exception | When the code doesn't know what to do anymore. |
| Omada Data Warehouse | Data | Data that is processed in Omada Identity, e.g. identities, resources and accounts, but excluding configuration data. |
| | Configuration | Configuration changes and issues such as errors in the system onboarding configuration, changes to views, properties, workflows, etc. |
| | Connection | Connection related events, e.g. URL, credentials, timeout. |
| | Progress | Progress of a running process or operation. |
| Omada Provisioning Service | Debug | Debug information, additional information to debug issues. |
| | SystemOperation | All events regarding timers that have executed, and system events and processes that have been running or failing. |
| | Configuration | Configuration changes and issues such as errors in the system onboarding configuration, changes to views, properties, workflows, etc. |
| | Provisioning | Everything concerning the actual provisioning. |
| | Performance | Logging containing elapsed time for an operation. |
| Operation Data Store | CopsApiClient | The client that is responsible for retrieving data like environment information for a given environment identifier and ES ODS persistence service URL and its token from the COPS API service. |
| | EnvironmentManager | The component collects, caches, and provides information about the environment. |
| | EventProcessingFunction | The component processes messages incoming from the event hub. For each type of message, an appropriate processor is selected that is responsible for handling the message. |
| | MessageSerializer | The component used to serialize and deserialize the collection of messages incoming from the event hub. |
| | ConnectionManager | The component handles database connections and transactions and disposes of them. |
| | ODSManager | The component provides ODS database operations: bulk insert, command and procedure execution, and supports transactions. |
| | AccessRequestMessageProcessor | The component responsible for processing received messages of type AccessRequestMessage by putting them in ODS. |
| | ApprovalQuestionMessageProcessor | The component responsible for processing received messages of type ApprovalQuestionMessage by putting them in ODS. |
| | CalculatedAssignmentMessageProcessor | The component responsible for processing received messages of type CalculatedAssignmentMessage by putting them in ODS. |
| | ClearAccessRequestsMessageProcessor | The component responsible for processing received messages of type ClearAccessRequestsMessage by cleaning up access requests data from ODS. |
| | ClearSurveysMessageProcessor | The component responsible for processing received messages of type ClearSurveysMessage by cleaning up survey data in ODS. |
| | ResourceMessageProcessor | The component responsible for processing received messages of type ResourceMessage by putting them in ODS. |
| | SurveyMessageProcessor | The component responsible for processing received messages of type SurveyMessage by putting them in ODS. |
| | SystemMessageProcessor | The component responsible for processing received messages of type SystemMessage by putting them in ODS. |

Logging configuration

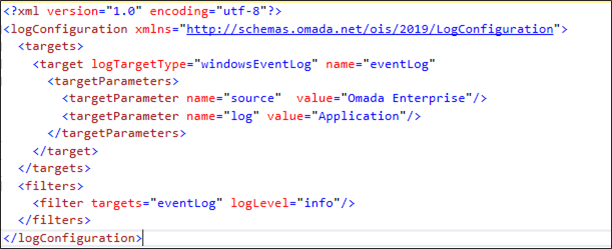

You can configure various aspects of the logs captured by Omada Identity using a dedicated XML configuration object. By default, log entries are sent to the Windows Event Log.

A change to the logging configuration takes effect immediately, but only on the website instance where the change was made. Other website instances, RoPE instances, and Timer Service instances must be restarted for the change to take effect.

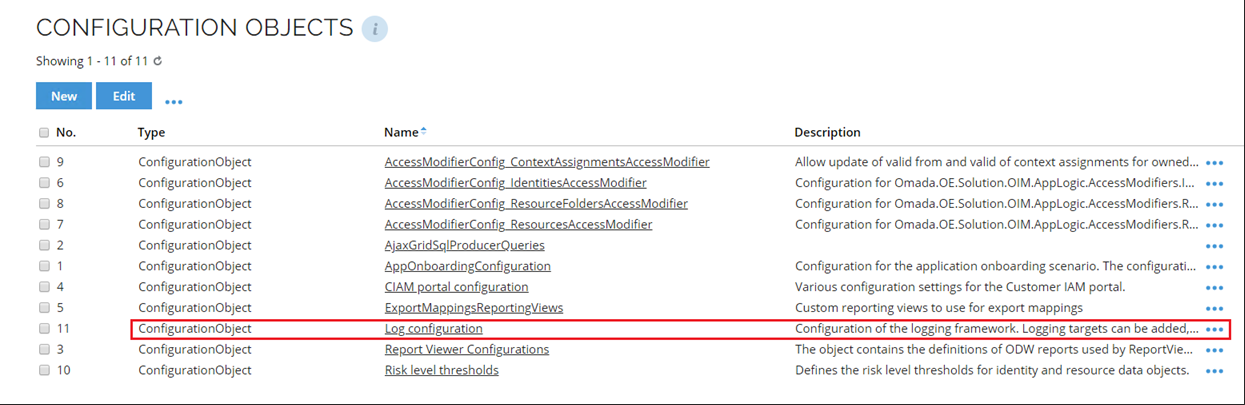

The logging configuration can be found in Setup > Administration > More > Configuration objects > Log configuration.

The Log configuration object allows you to define how logging is performed. The configuration XML consists of two main sections, which are targets and filters.

The targets section is used to configure where log messages should be sent to, and the filters section is used to configure the filtering of the log messages for the targets.

When adding log targets, be sure to also explicitly set filters for the configured target. Configuration of filters is required as the default logging level for targets is debug, which may result in extensive logging if left with the default value.

Filters

The Log filters allow for filtering specific properties of a log entry to determine which targets should receive the log entry. If a filter is defined for a particular target, only log entries that match the filter criteria will be sent. However, if there is no filter defined for a target, all log entries will be sent to that target regardless of their properties.

The following attributes from the log entry can be used in the filter:

- Log level - filtering on log level means that only log entries with a log level greater than or equal to the filtered level are sent to the target.

  - Available values, in ascending order of severity, are trace, debug, information, warning, error, and fatal.

- Category - any text value.

- Component - the name of the Omada Identity component from which the log entry originates.

- Targets - a comma-separated string of log target names to which the log entry should be sent if it passes the filter.


<filter category="Governance" targets="eventLog,splunk"></filter>

The filter above sends all log entries with the category Governance to the log targets named eventLog and splunk.

Notice that these are the names of the targets as defined in the target configuration section, not the log target types.
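To make the difference between a target name and a target type concrete, the following fragment is a minimal sketch that pairs a target definition with a filter referring to it. It uses only the elements and attributes shown on this page. The elements that enclose the targets and filters sections are left out because they are not shown here.

```xml
<!-- Goes in the targets section: a target of type "windowsEventLog" that is named "eventLog" -->
<target logTargetType="windowsEventLog" name="eventLog" disabled="false">
  <targetParameters>
    <targetParameter name="source" value="Omada Enterprise"/>
    <targetParameter name="log" value="Application"/>
  </targetParameters>
</target>

<!-- Goes in the filters section: the targets attribute holds the target name ("eventLog"),
     not the target type ("windowsEventLog") -->
<filter category="Governance" targets="eventLog"></filter>
```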

Internal log file

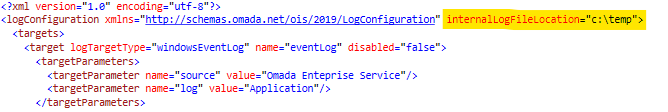

In case of any problems or errors related to the logging framework or configuration of the logging framework, you may need to inspect the internal log file of the logging framework.

The default path of the ES internal log files is:

C:\Users\{USERNAME}\AppData\Local\Temp

where {USERNAME} is the name of the user running the specific Omada Identity component.

You can change the default path of the internal log files by setting the internalLogFileLocation in the Log configuration data object.

Log targets on-prem

Log targets are used to display, store, or pass log messages to another destination. This destination could, for instance, be the Windows event log or a SIEM tool such as Splunk. It is possible to have the same target type configured multiple times in the configuration, but the name should be unique.

The following attributes are available for and apply to all log targets:

| Target attribute | Description |

|---|---|

| logTargetType | Available target types: windowsEventLog, splunk. To log to a file, use file as the target type. |

| name | Unique name for the target. |

| disabled | Specifies whether the target is disabled. Possible values: true, false |

See the sections below for information on the attributes specific to each log target type.
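Because the same target type can be configured more than once, a configuration could, for example, contain two Windows event log targets that write to different event logs. The fragment below is only a sketch. The target names eventLogApplication and eventLogOmada and the user-defined log name OmadaIdentity are hypothetical. The parameters are the ones described in the Windows event log target section, and the enclosing section elements are omitted.

```xml
<!-- Two targets of the same type; each one has a unique name -->
<target logTargetType="windowsEventLog" name="eventLogApplication" disabled="false">
  <targetParameters>
    <targetParameter name="source" value="Omada Enterprise"/>
    <targetParameter name="log" value="Application"/>
  </targetParameters>
</target>

<target logTargetType="windowsEventLog" name="eventLogOmada" disabled="false">
  <targetParameters>
    <targetParameter name="source" value="Omada Enterprise"/>
    <!-- "OmadaIdentity" is a hypothetical user-defined event log name -->
    <targetParameter name="log" value="OmadaIdentity"/>
  </targetParameters>
</target>

<!-- Each filter routes one category to one of the named targets -->
<filter category="Governance" targets="eventLogApplication"></filter>
<filter category="Security" targets="eventLogOmada"></filter>
```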

Windows event log target

The Windows event log target can be used to write log entries to the Windows event log. The target parameters are:

- source - value to be used as the event Source.

- log - name of the Event Log to write to. This can be System, Application, or any user-defined name.

- detailedLogging - specifies whether the log entries should contain details about the log event. Possible values: true, false. The default value is false. If disabled, the log entries only contain the timestamp, the message, and the exceptions. If enabled, all details from the log entry are added.

Example XML:

<target logTargetType="windowsEventLog" name="eventLog" disabled="false">

<targetParameters>

<targetParameter name="source" value="Omada Enterprise"/>

<targetParameter name="log" value="Application"/>

<targetParameter name="detailedLogging" value="true"/>

</targetParameters>

</target>

By default, the entries in the Windows Event Log only contain the timestamp, the log message and the exception details (if it is an exception being logged) of a log entry. This can be changed by setting the detailedLogging parameter of the Windows Event Log targets to true. Changing this parameter will output all details of the log entry with a new line for each property and value of the log entry.

File log target

The File Log target can be used for writing log entries to a file. The target parameter is:

- filename - the absolute or relative path to the log file. To include the current date in the file name, add ${shortdate} to it, for example: C:\logs\OISLogs_${shortdate}.log.

When using a relative path, each component in Omada Identity (ES, RoPE, OPS, and ODW) will create a file in the working directory of the component. For example, the log file for ES will be created in the website folder and the one for RoPE will be created in the services folder.

We therefore recommend using an absolute path instead, for example the path to a file share that is accessible by all the servers where the Omada Identity components are installed.

Example of log target XML for file log target:

<target logTargetType="file" name="oisFileLog">

<targetParameters>

<targetParameter name="filename" value="C:\logs\OISLogs_${shortdate}.log"/>

</targetParameters>

</target>
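In line with the recommendation to use a location that all servers can reach, the same target could point to a file share instead of a local drive. This is a sketch only; the server and share names in the UNC path are hypothetical.

```xml
<target logTargetType="file" name="oisFileLog">
  <targetParameters>
    <!-- \\fileserver01\oislogs is a placeholder for a share that every Omada Identity server can write to -->
    <targetParameter name="filename" value="\\fileserver01\oislogs\OISLogs_${shortdate}.log"/>
  </targetParameters>
</target>
```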

Azure Log target

The Azure Log target can be used for writing log entries to Azure Log Analytics. You need the Workspace ID and the shared key from Azure Log Analytics to configure the log target. The following are target parameters:

- workspaceid - the Workspace ID of the log analytics workspace in Azure.

- sharedkey - the Shared Key or Primary Key for the log analytics workspace in Azure.

- logname - the name of the custom log to save log entries in. Only letters are accepted. The log name in Azure Log Analytics will be postfixed with _CL. The log name is case-sensitive in Azure Log Analytics.

The following three parameters are only required if you wish to use the Event log list page in Enterprise Server to read the log entries from Azure Log Analytics. To configure these parameters, navigate to Setup -> Administration -> More… -> Configuration Objects and edit the Log Configuration object. In the log target named azureLog, configure the following parameters:

- azuretenantid - the tenant ID for the Azure subscription.

- applicationid - the Azure AD application ID that is configured with the Log Analytics Reader access to the Azure Log Analytics workspace.

- clientsecret - the client secret from Azure AD.

For performance reasons, the credentials for the Azure Log Analytics workspace are cached for an hour. This means that changes to the azuretenantid, clientsecret, or applicationid parameters in the log configuration will not take effect before the authentication token has expired. To force a refresh of the credentials, perform a reset of the web server.

Retrieving Workspace ID and Shared Key from Azure Log Analytics

- In Azure, open Log analytics workspaces.

- Select the workspace.

- Select Agents management in the Settings section.

- Copy the values from the WORKSPACE ID and the PRIMARY KEY (Shared Key).

Example of log target XML for Azure Log Analytics:

<target logTargetType="azureLogAnalytics" name="azureLog">

<targetParameters>

<targetParameter name="workspaceid" value="0aac010e-9e0a-4d55-8d0d-49db2dc795c3" />

<targetParameter name="sharedkey" value="WY6A9UO6/uWNSSgeWz7qyfJRmibqNH78GqPrR1ptVkZsWN06GUjYrUZvyrS9wcXvMGoVb2YnpkXxJEzG7NNuPU+6Vndw==" />

<targetParameter name="logname" value="OISLogs" />

</targetParameters>

</target>
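If the Event log list page in Enterprise Server should read log entries from Azure Log Analytics, the azureLog target would also carry the three additional parameters described earlier. The following is a sketch with placeholder values, not a tested configuration:

```xml
<target logTargetType="azureLogAnalytics" name="azureLog">
  <targetParameters>
    <!-- Needed for writing log entries; replace the placeholders with your own values -->
    <targetParameter name="workspaceid" value="WORKSPACE-ID" />
    <targetParameter name="sharedkey" value="SHARED-KEY" />
    <targetParameter name="logname" value="OISLogs" />
    <!-- Only needed for reading log entries on the Event log list page -->
    <targetParameter name="azuretenantid" value="TENANT-ID" />
    <targetParameter name="applicationid" value="APPLICATION-ID" />
    <targetParameter name="clientsecret" value="CLIENT-SECRET" />
  </targetParameters>
</target>
```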

For more information, see the Microsoft documentation.

Splunk target

The Splunk target can be used for writing log entries to Splunk.

You can use both Splunk Enterprise and Splunk Cloud as targets for event logging. Both Splunk solutions use the HTTP Event Collector (HEC) and the parameters listed below.

Splunk must be made available through a public IP address.

The following are the target parameters:

- server - Splunk instance URL.

- token - token from the Splunk HTTP Event Collector.

- channel - channel ID on the Splunk HTTP Event Collector.

- ignoreSslErrors - ignore errors with the SSL certificate. The default is true.

- includeEventProperties - include event properties. The default is true.

- retriesOnError - number of retries when an error occurs. The default is 0.

Example XML for Splunk Enterprise solution:

<target logTargetType="splunk" name="splunk">

<targetParameters>

<targetParameter name="server" value="https://prd-p-example.splunk.com:8088"/>

<targetParameter name="token" value="8429944d-1ffb-4267-857b-302485015b56"/>

<targetParameter name="channel" value="8429944d-1ffb-4267-857b-302485015b56"/>

<targetParameter name="ignoreSslErrors" value="true"/>

<targetParameter name="includeEventProperties" value="true"/>

<targetParameter name="retriesOnError" value="0"/>

</targetParameters>

</target>

Example XML for Splunk Cloud solution:

<target logTargetType="splunk" name="splunk" disabled="false">

<targetParameters>

<targetParameter name="server" value="https://prd-p-example.splunkcloud.com:<port>"/>

<targetParameter name="token" value="8429944d-1ffb-4267-857b-302485015b56"/>

<targetParameter name="channel" value="8429944d-1ffb-4267-857b-302485015b56"/>

</targetParameters>

</target>

The exact syntax of the URI for Splunk Cloud depends on a number of factors, for example, where it is hosted or whether you are using a free trial. For the most up-to-date details, see the section Send data to HTTP Event Collector on Splunk Cloud Platform in the Splunk documentation on HTTP Event Collector in Splunk Web. Remember that third-party content may change independently of Omada.

For the Splunk target, the disabled attribute is set to true by default. To enable the log target, change the value of the disabled attribute to false.
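Applied to the Splunk Enterprise example above, this means stating the disabled attribute explicitly on the target element. The fragment below is a minimal sketch that reuses the placeholder server and token values from that example. It also adds a category filter, so that the target does not receive every log entry. The enclosing section elements are omitted.

```xml
<!-- disabled="false" is set explicitly, because Splunk targets are disabled by default -->
<target logTargetType="splunk" name="splunk" disabled="false">
  <targetParameters>
    <targetParameter name="server" value="https://prd-p-example.splunk.com:8088"/>
    <targetParameter name="token" value="8429944d-1ffb-4267-857b-302485015b56"/>
    <targetParameter name="channel" value="8429944d-1ffb-4267-857b-302485015b56"/>
  </targetParameters>
</target>

<!-- Without a filter, all log entries would be sent to the target -->
<filter category="Authentication" targets="splunk"></filter>
```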

Configure import to use the new version of Newtonsoft.Json

NLog.Targets.Splunk references an older version of Newtonsoft.Json. Therefore, you need to configure the import to use the new version of Newtonsoft.Json. To do so, follow these steps:

- On the SSIS server, locate the DTExec.exe.config file that is used by the import. For a default installation of SQL Server 2017, it should be in C:\Program Files\Microsoft SQL Server\140\DTS\Binn.

- Edit DTExec.exe.config and insert the following snippet into the <configuration><runtime> element:

<assemblyBinding xmlns="urn:schemas-microsoft-com:asm.v1">

<dependentAssembly>

<assemblyIdentity name="Newtonsoft.Json" publicKeyToken="30ad4fe6b2a6aeed" culture="neutral" />

<bindingRedirect oldVersion="0.0.0.0-13.0.0.0" newVersion="13.0.0.0" />

</dependentAssembly>

</assemblyBinding>

- Save the file.

Setting up Splunk Enterprise HTTP Event collector (HEC)

To set up the HTTP Event collector for Splunk Enterprise solution, follow the steps below:

- Go to https://localhost:8001 (Splunk Enterprise solution installed on your machine).

- Log in with the account information entered during installation.

- Click Settings -> Data -> Data inputs.

- Under Local Inputs you will find HTTP Event Collector. Select the Add new action on the right.

- Give the HEC a Name and click Next.

- In the Source Type, click Structured -> _json.

- As the App context, select splunk_httpinput.

- As the Index, select main.

- Click Review and Submit.

- Make a note of the created Token Value. You need this value to configure Omada Identity.

- Go back to Settings -> Data -> Data inputs.

- Click HTTP Event Collector and verify that your HEC has been added.

- Click the Global Settings button in the top right-hand corner.

- If you want to use HTTPS, select the Enable SSL check box. Otherwise, clear it.

- Select Enabled for All tokens, and then click Save.

Setting up Splunk Cloud HTTP Event collector (HEC)

- Go to https://splunk.com.

- Log in to your account using the user icon in the top right-hand corner.

- Click the user icon again and select Instances.

- From the list of instances select Access Instance.

- From the instance page select Settings -> Data -> Data inputs.

- Under Local Inputs you will find HTTP Event Collector. Select the Add new action on the right.

- Give the HEC a Name and click Next.

- In the Source Type, click Structured -> _json.

- As the App context, select splunk_httpinput.

- As the Index, select main.

- Click Review and Submit.

- Make a note of the created Token Value. You need this value to configure Omada Identity.

- Go back to Settings -> Data -> Data inputs.

- Click the HTTP Event Collector link and verify that your HEC has been added.

- Click the Global Settings button in the top right-hand corner.

- Select Enabled for All tokens, and then click Save.